Data Privacy

Importantly, businesses need to follow the regulations that govern information privacy and the handling of PII (personally identifiable information). Particular laws apply depending on jurisdiction: for instance, the GDPR (General Data Protection Regulation) in the European Union and the CCPA (California Consumer Privacy Act) in California. These regulations dictate how software companies and platforms protect the identity of users. Consider how major companies like Google have been fined in Europe for not protecting the identities of their consumers.

But the influx of LLMs complicates matters. These machines are essentially black boxes: there is an input of data and a resulting output. In a typical use case, the machine would need to be trained by receiving relevant company data. But some of that information may be private and must not leak outside the company. How do companies protect that information?

In response to just this sort of threat, many banks have completely shut off access to OpenAI because they don't know whether employee use of the platform will affect compliance with privacy regulations. This is a reasonable fear considering that they could be meaningfully fined and even lose their business if there were to be a privacy breach. Those banks will not enable OpenAI until they have vetted solutions that would enable them to be sure that they can comply with all regulations.

However, there is both a threat and an opportunity here.

For attackers, there is the potential to carve out and scrape private information, whether through a deliberate attempt to disrupt the company’s code base or through negligence on the company’s end. Because there are two opportunities for attack, at the input level and at the output level, attackers can either hack at the entrance or recoup private data at the output.

The upshot is that somebody needs to protect against this dual threat by providing tools or a solution. That solution would require anonymizing the data that is entered into the machine while also making sure that any data that is pushed out of the machine is kept private. In other words, two things are required: an access solution (governing who can ingest and digest data) and a leakage protection solution (making sure no private or critical data is leaked).
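As a rough illustration of the input side, the anonymization step could look something like the sketch below. It is a minimal example under stated assumptions, not a production design: the two regular expressions, the placeholder format, and the function names `anonymize` and `restore` are all hypothetical, and real PII detection needs far broader coverage than two patterns.

```python
import re

# Hypothetical patterns for illustration only; real PII detection
# needs much broader coverage than an email and an SSN-like number.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def anonymize(text):
    """Replace each PII match with a placeholder token.

    Returns the anonymized text and a mapping from token to original value,
    which stays inside the company.
    """
    mapping = {}
    for label, pattern in PII_PATTERNS.items():
        def substitute(match, label=label):
            token = f"<{label}_{len(mapping) + 1}>"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(substitute, text)
    return text, mapping


def restore(text, mapping):
    """Put the original values back into text that contains placeholder tokens."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


prompt = "Contact jane.doe@example.com, SSN 123-45-6789"
safe_prompt, mapping = anonymize(prompt)
print(safe_prompt)
```

Only `safe_prompt` would be sent to the external model. The mapping never leaves the organization, so placeholders in the model's answer can be swapped back with `restore` before the answer is shown to the employee.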

To some degree, traditional data security tools can help. A well-known solution that has been in use for over 20 years is DLP, or data loss prevention. Whenever there is an exchange of information, DLP software can protect against data leakage. With that in mind, some of the traditional players are going to try to adapt their solutions to evolving needs. One of the primary challenges in DLP has been choosing between full coverage of the data and sampling, given the sheer scale of what needs to be protected. The challenge is exacerbated by prompts that carry massive amounts of data, and by the growing volume of prompts as the tools become more popular.

But there's also an opportunity for fast-iterating startups with quick insight to become leaders in data protection. Certainly, some of the protection will need to come from the platforms themselves, since they have to defend themselves. However, major platforms like OpenAI and Anthropic will not necessarily be able to produce these solutions and maintain them. And that presents an opportunity for an independent company.

LLM Applications: Infrastructure and fundamental flaws

There are many applications that harness LLMs on some level. For some, an LLM is integrated into the application itself: it is internal rather than external and integral to the application’s service. The problem emerges from a lack of transparency that prevents customers from protecting themselves.

SaaS companies in particular have a responsibility to their end customers, who often don’t know that the application is using Gen AI. Take Salesforce, for example. Everything is packaged into the software, but like many applications, it is beginning to incorporate Gen AI.

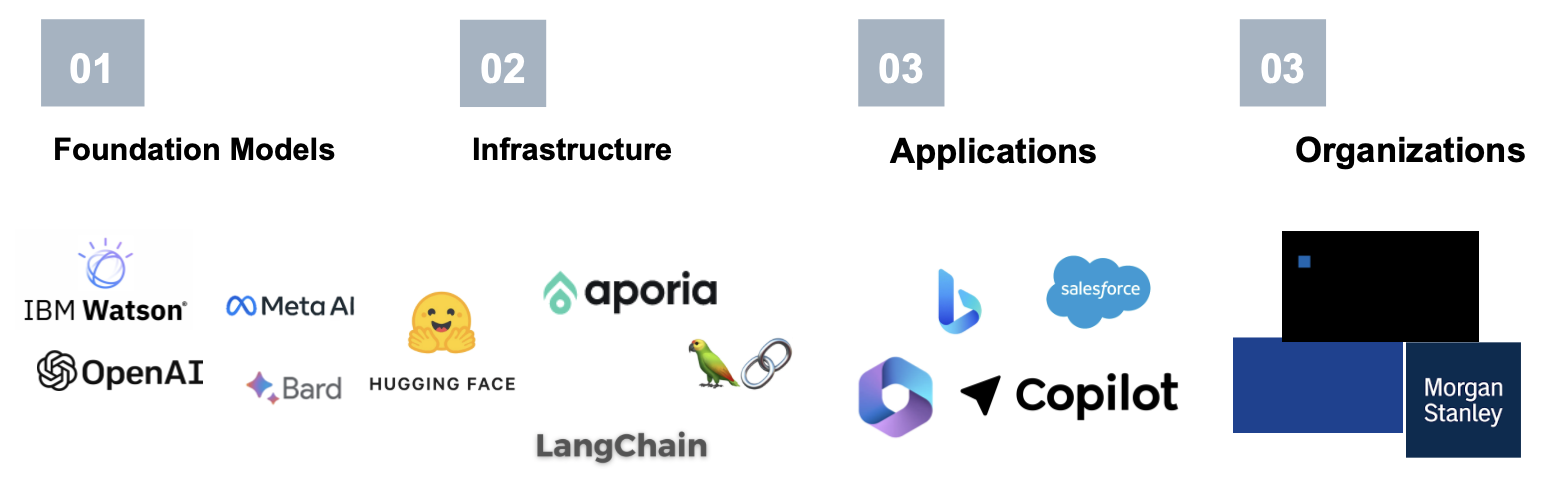

Within the larger ecosystem, there are quite a few companies that are trying to secure either the LLM or the LLM engine environment.

Of the companies in this figure, the first category represents the foundational model providers themselves. Those come in the form of an engine with a CLI or API interface. Their Gen AI is inherent to the service or app, and they are tasked with providing the security measures related to their (first party) service.

Category 2 in the image above represents infrastructure providers, such as observability and developer tools, which help develop applications on top of Gen AI foundational models in a way that is easier to digest, easier to monitor, and more secure.

As we think through the shared responsibility model for security, the pure-play opportunity for security vendors in servicing category 1 vendors (and, to a certain degree, category 2 vendors) is likely limited. For the most part, these vendors will depend on internal resources to develop their security capabilities, coupled with external service providers to pentest and make sure their services are tried and tested.

The onus is on each individual platform to make sure that its underlying infrastructure is secure. And this is where it is important to think about the possibility of fundamental flaws in the platform itself. Can the platform be penetrated or hacked?

Categories 3 and 4 represent the providers of applications that use Gen AI under the hood. This means that SaaS applications and other organizational apps can consolidate some of the interactions between end customers and OpenAI, with everything packaged into software like Copilot or Auto GPT. From that perspective, a Gen AI engine is wrapped inside an application or interfaces with it through calls. The complexity stemming from this is that the underlying Gen AI is decoupled, and sometimes masked, from the end user.

From a security perspective, there's only so much that SaaS vendors and the broader enterprise organizations can do to protect themselves on a standalone basis. Traditionally, security specialists come in and provide protection for these companies, whether for privacy or for security. SaaS vendors are a particularly interesting case for Gen AI security, since most solutions that protect against security flaws cater to the end customers of those SaaS vendors and not to the SaaS vendors themselves. In this way, the infrastructure is set up to place the burden of protection on the user rather than the platform. Yet many users are unaware that they need protection related to their SaaS footprint to begin with.

Here, the responsibility for privacy also comes into question. In a given interaction, how does the user know that the proper restrictions around private information have been put in place by all parties involved? And even more importantly, how can privacy be tracked among agents and agent orchestrators? Who is responsible for what?

Creating new tools for prevention and defense: Prompt Injection, Agent Attacks, and Privacy

Thinking about the defense side, it is important to ask how the traditional SOC (security operations center) will be impacted by these emerging threats and how the SOC will need to account for these issues. What new areas will need to be protected and how will it be done?

Preventing attacks in these models is difficult in large part because of the degree of complexity. Complicating factors include Gen AIs that exist as plugins inside applications, and apps that contain agents. In addition to being more difficult problems to solve, these packaged cases make it harder to implement prompt injection mitigation software.

Notably, these problems are unlikely to be solved using the AI itself because AIs are statistical systems. However, one way to enact agent mitigation will likely be through required permissions, that is, through the vetting of agent software and signature scanning (the use of a certified code signature). In this way, it becomes a question of who is permitted into a given space in order to control the flow of information within an organization. Each agent will likely require a set of permissions.
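To make the permissions idea concrete, here is a minimal sketch of how an organization might vet an agent before letting it act. It rests on several assumptions: the `Agent` record, the permission strings, and the `is_allowed` check are hypothetical, and an HMAC with a shared key stands in for real code signing, which would normally use asymmetric keys and certificates.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Hypothetical shared secret used only for this sketch. Real code signing
# would rely on asymmetric keys and a certificate chain instead.
SIGNING_KEY = b"demo-key-not-for-production"


@dataclass(frozen=True)
class Agent:
    name: str
    code: bytes             # the agent's code as vetted by the organization
    signature: str          # signature issued when the code was vetted
    permissions: frozenset  # actions this agent has been granted


def sign(code: bytes) -> str:
    """Produce the signature the organization issues for vetted code."""
    return hmac.new(SIGNING_KEY, code, hashlib.sha256).hexdigest()


def is_allowed(agent: Agent, action: str) -> bool:
    """Allow an action only if the code is unmodified and the action was granted."""
    if not hmac.compare_digest(sign(agent.code), agent.signature):
        return False  # code changed after vetting, or signature is forged
    return action in agent.permissions


code = b"def run(): ..."
reporter = Agent("reporter", code, sign(code), frozenset({"read:crm"}))
print(is_allowed(reporter, "read:crm"))
print(is_allowed(reporter, "write:crm"))
```

The design choice here is deny by default: an agent whose code no longer matches its signature gets nothing, and a correctly signed agent gets only the actions it was explicitly granted.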

But how do we defend against the multi-layered forms of attack when it comes to agents and agent orchestrators? Here, there’s a layer of complexity that goes above and beyond a simple code base or anonymizing incoming information. Security sector solutions are going to have to solve the much more difficult problem of attackers harnessing agent orchestrators to discover an organization’s weak points.

LLM Gateways (not a Firewall!)

It is useful to think of solutions as a gateway, rather than a firewall. They don’t keep others out so much as control the input and output of a machine.

Traditionally, everything that comes into an organization has to go through a firewall. That model is not sustainable in a distributed system. However, with a service like OpenAI, an access and security gateway is useful as a guard between internal and external software. In that instance, it becomes possible to control the interaction between employees of a corporation and its external software, both outgoing and incoming.

In addition to anonymizing private information, it is also possible to add security modules on top of the gateway in order to recognize anomalous infiltration (or exfiltration) attempts and data leaks (data loss prevention, or DLP).
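The gateway idea can be sketched as a thin wrapper that sits between employees and the external service and inspects traffic in both directions. This is an illustrative sketch only: `call_model` is a hypothetical stand-in for whatever client actually talks to the external LLM, and the two patterns are assumptions rather than a real DLP rule set.

```python
import re
from typing import Callable

# Illustrative patterns only; a real DLP module would use a much richer rule set.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),   # SSN-like numbers
    re.compile(r"\bsk-[A-Za-z0-9]{8,}\b"),  # API-key-like tokens
]


def redact(text: str) -> str:
    """Mask every match of a sensitive pattern."""
    for pattern in SENSITIVE_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


def gateway(prompt: str, call_model: Callable[[str], str]) -> str:
    """Control both directions: redact the outgoing prompt and the incoming response."""
    safe_prompt = redact(prompt)       # outgoing: nothing sensitive leaves
    response = call_model(safe_prompt)
    return redact(response)            # incoming: nothing sensitive is passed on


def fake_model(prompt: str) -> str:
    """Stand-in for an external LLM call; simply echoes the prompt."""
    return "You said: " + prompt


print(gateway("My SSN is 123-45-6789", fake_model))
```

Because the wrapper only needs a callable, the same checks could in principle sit in front of any external model, and further modules (anomaly detection, logging, access control) could be added at the same choke point.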

While gateways are viable solutions for privacy issues within interactions with external services, they are unlikely to solve the problem of injections. That’s because injections are a lot more complex: they could pass through the gateway and get inserted into the system to create malicious output. Therefore, a separate solution would be required for injections. Agent management, on the other hand, does not solve privacy issues but may solve injections.

What cyber security business opportunities are emerging?

The most obvious categories we’ve seen emerge in this space include platforms set to protect Gen AI use for SaaS and organizational app providers. These include model integrity, hallucination stopgaps, Gen AI / LLM gateways, Gen AI access controllers, privacy and PII hygiene solutions, and DLP solutions. We’ve seen these gaps being filled both by new, pure-play vendors and by existing vendors, such as data security vendors, who are expanding their offerings to cover LLM / Gen AI environments and applications.

Moreover, we’ve seen multiple security companies leveraging LLMs to improve their solutions, for example by adding new automations to their existing products or by making their interfaces more natural for end users. Anvilogic, for example, aims to automate certain threat hunting capabilities, namely the series of steps required to understand and hunt down a threat. In this way, an agent orchestrator could be used to collect and create multiple playbooks automatically.

Another way the world of solutions is evolving is through the programs that the new platforms are creating to address the new openings they inherently create for attackers. The OpenAI Cybersecurity Grant Program is one such example. OpenAI provides an API (application programming interface) through which users can send it a prompt and receive an answer. But while OpenAI has built quite a bit, it can't cover all of the forms of possible attacks. Ultimately, the responsibility to protect its customers lies with OpenAI, and obviously it would want the platform to be safe for use. However, considering all the various methods that attackers could potentially use, OpenAI will need help protecting its platform. Through the grant program, it will provide funding for third-party solutions from security vendors that will help protect the platform. Just as companies will need extra protection as these technologies progress, Gen AI technologies will also need protective assistance from industries and startups.

Gen AI platforms, the security community, and startups alike should also know that the only way to protect against adversaries will be an industry-wide effort to contain the Gen AI blast radius and protect the new surface area that can potentially be attacked. Much like with other transformational shifts, there are various opportunities for startups and incumbents alike, but the only way the cyber security community prevails over the multitudes of adversaries and attackers is by collaborating and hardening the innovation. That’s the only way for security to evolve at the pace of innovation and to be an enabler rather than a bottleneck.

Thank you to Dan Pilewski whose research project as an intern at Cervin provided the foundation for this blog.